I'm planning on doing a full write-up on cloud-based backup vendor CrashPlan, but in the interim I wanted to point out one of the biggest problems I have with cloud-based backups for home users. Despite paying a whopping $70.95 every month for "business class" cable Internet, I only get a paltry 3Mbps upload rate. I run a web server (which hosts this blog), a mail server, a VPN server, and a variety of other services, so I can only dedicate about 1.5Mbps to backing up all of my systems.

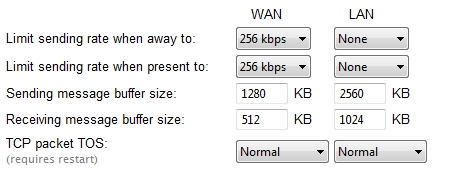

Since I back up two computers and four servers, I have to split the bandwidth between six systems. At the moment, I'm using the CrashPlan backup client's built-in throttling to limit each system to 256Kbps. It's not even remotely efficient, but I'm lazy and it is easy to configure.

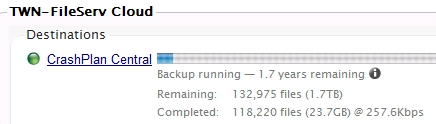

I have a file server that stores movies, music, books, TV shows, programs, and a variety of other digital media that I've acquired over the years. Due to storage constraints, it only takes up a modest 1.7TB (although it is all compressed). So how long does it take to back up 1.7TB at 256Kbps? According to CrashPlan, about 1.7 years. That's roughly one year per terabyte, which is about as ludicrous as it sounds.
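CrashPlan's estimate is easy to sanity-check by hand. The snippet below is a back-of-the-envelope sketch, not anything CrashPlan itself runs: it assumes decimal units (1TB = 10^12 bytes, 1Kbps = 1,000 bits per second) and ignores protocol overhead, compression, and deduplication. The function name `upload_days` is my own.

```python
# Rough transfer-time estimate. Assumptions: decimal units
# (1 TB = 10**12 bytes, 1 Kbps = 1000 bits/s), a perfectly sustained
# upload rate, and no overhead, compression, or deduplication.

SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365


def upload_days(size_tb: float, rate_kbps: float) -> float:
    """Days needed to upload size_tb terabytes at a sustained rate_kbps."""
    bits = size_tb * 10**12 * 8
    seconds = bits / (rate_kbps * 1000)
    return seconds / SECONDS_PER_DAY


days = upload_days(1.7, 256)
print(f"{days:.0f} days, or about {days / DAYS_PER_YEAR:.1f} years")
# prints "615 days, or about 1.7 years"
```

Under those assumptions the number lines up with what the client reports.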

This is a less substantial issue for the typical home user as they only care about their upload bandwidth when they're actually using their computer. Moreover, they traditionally have one or two devices to back up as opposed to the six I'm dealing with (and that's only the stuff getting backed up to the cloud). CrashPlan allows upload bandwidth to be limited on each system and offers an impressive degree of configurability. Limits can be set for both WAN bandwidth (for cloud-based backups or backups sent to a friend's computer) and LAN bandwidth (for backups to other systems in the home). I always keep LAN bandwidth unthrottled as I have no qualms about tearing up a few megabytes on a gigabit network. Notably, both the LAN and WAN limits can be set to different rates depending on whether the computer is idle or actively being used. The average user can probably leave their WAN uploads unthrottled when they aren't at their computer, but might want to throttle them to a reasonable rate when they're actively using their system.

The problem is a bit more complex for me, as I need to have at least 1-1.5Mbps available around the clock to handle incoming web requests, e-mail, VPN connections, etc. The fix for this problem is two-fold. First, I'll get a bit more technical with the throttling. I plan to use QoS at the network edge to limit all systems to a collective 1.5Mbps. I can then unthrottle each individual client, thus allowing "finished" systems to cede their bandwidth to systems that are still running. Once I get a chance to play around with this, I'll definitely write up a how-to along with my impressions.
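To get a feel for how much a shared cap could help, here is a small model of the two approaches. It is only a sketch under loud assumptions: the 1,700GB figure is my file server, but the other five backlog sizes are made-up placeholders, the shared cap is taken as 6 x 256 = 1,536Kbps, and the QoS device is assumed to split the pool evenly among whichever clients are still uploading. Real QoS gear may divide traffic differently.

```python
# Model: fixed per-client throttles vs. one shared cap where finished
# clients cede their bandwidth. Decimal units (1 GB = 10**9 bytes).

SECONDS_PER_DAY = 86_400


def days_to_upload(size_gb: float, rate_kbps: float) -> float:
    """Days to upload size_gb gigabytes at a sustained rate_kbps."""
    return size_gb * 10**9 * 8 / (rate_kbps * 1000) / SECONDS_PER_DAY


def fixed_throttle_days(sizes_gb, per_client_kbps):
    """Every client keeps its own cap, so the largest backlog finishes last."""
    return max(days_to_upload(size, per_client_kbps) for size in sizes_gb)


def shared_pool_finish_days(sizes_gb, pool_kbps):
    """Finish time of each client (smallest first) under an even split."""
    finish_times = []
    elapsed = 0.0
    uploaded = 0.0  # GB already sent by every client that is still active
    ordered = sorted(sizes_gb)
    for index, size in enumerate(ordered):
        active = len(ordered) - index
        elapsed += days_to_upload(size - uploaded, pool_kbps / active)
        uploaded = size
        finish_times.append(elapsed)
    return finish_times


# 1700 GB is the file server; the other five sizes are hypothetical.
backlogs_gb = [50, 50, 100, 100, 200, 1700]

fixed = fixed_throttle_days(backlogs_gb, per_client_kbps=256)
shared = shared_pool_finish_days(backlogs_gb, pool_kbps=1536)
print(f"fixed 256Kbps throttles: last system done after {fixed:.0f} days")
print(f"shared 1536Kbps pool:    last system done after {shared[-1]:.0f} days")
```

With these placeholder sizes, the shared pool finishes in a fraction of the time because the file server inherits the bandwidth the smaller systems no longer need.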

The second option is a bit more pricey. For an extra $40 per month, I can jump to the next bandwidth tier and increase my upload speed by 133%. Although jumping from 3Mbps to 7Mbps might not sound substantial, it would allow me to throw an extra 2Mbps of upload speed at the file server. By increasing the file server's upload bandwidth nine-fold (from 256Kbps to roughly 2.25Mbps), I can drop the upload time from 1.7 years to about 10 weeks, a much more manageable figure. I think an extra $40 per month is worth the peace of mind that comes with having a complete cloud backup of every system I own.
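For anyone who wants to check the math on the upgrade, here is a quick sketch. It assumes "2Mbps" means 2,048Kbps (consistent with treating 1.5Mbps as six 256Kbps slices) and simply scales CrashPlan's 1.7-year estimate by the speedup; the variable names are mine.

```python
# Upgrade arithmetic. Assumption: 1 Mbps = 1024 Kbps, so the extra
# 2 Mbps for the file server is 2048 Kbps on top of its current 256 Kbps.

OLD_UPLOAD_MBPS = 3
NEW_UPLOAD_MBPS = 7
increase_pct = (NEW_UPLOAD_MBPS - OLD_UPLOAD_MBPS) / OLD_UPLOAD_MBPS * 100

current_kbps = 256
upgraded_kbps = current_kbps + 2 * 1024
speedup = upgraded_kbps / current_kbps

CURRENT_ESTIMATE_YEARS = 1.7  # CrashPlan's figure at 256 Kbps
upgraded_weeks = CURRENT_ESTIMATE_YEARS * 365 / 7 / speedup

print(f"tier upgrade: +{increase_pct:.0f}% upload speed")
print(f"file server:  {upgraded_kbps} Kbps, {speedup:.0f}x faster")
print(f"new estimate: {upgraded_weeks:.1f} weeks")
```

Under these assumptions the new estimate lands between nine and ten weeks.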
